mmdection_hammer_segmentation

This is my first time constructing a custom dataset and training on it with MMDetection.

The whole pipeline is as follows:

I. Collect and prepare the raw dataset.

II. Convert the raw dataset into COCO style.

III. Add and modify the Mask R-CNN configuration in MMDetection to fit our requirements.

IV. Train the model with MMDetection.

V. Evaluate and visualize the test dataset.

VI. Convert the results into mask images so that they slot smoothly into our pipeline.

Because the project is still ongoing, parts I and VI will NOT be described in detail.

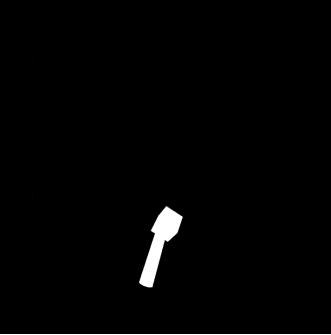

1. The dataset

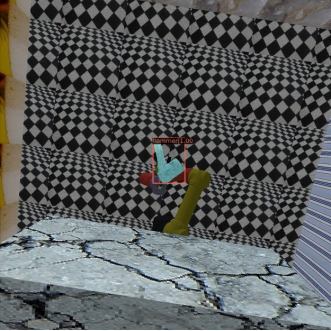

Shown above is a sample from the dataset I created, which contains an RGB image and its corresponding mask. The foreground is a tool (the hammer), and the background contains other unrelated things. At the very beginning, before training even comes into the picture, the top priority is to create enough data and split it into training, validation and test sets. We collected 4535, 464 and 454 images for training, validation and evaluation, respectively.
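A split like this can be sketched in a few lines. This is only a minimal illustration, assuming all 5453 images sit in one pool before splitting; the file names and the `split_dataset` helper are hypothetical, and only the three counts come from this post.

```python
import random

def split_dataset(items, n_val, n_test, seed=0):
    """Shuffle once with a fixed seed, then carve off the test and validation sets."""
    items = list(items)
    random.Random(seed).shuffle(items)  # fixed seed keeps the split reproducible
    test = items[:n_test]
    val = items[n_test:n_test + n_val]
    train = items[n_test + n_val:]      # everything left over is training data
    return train, val, test

# Hypothetical file names; only the counts are taken from the article.
names = [f"{i:05d}.png" for i in range(4535 + 464 + 454)]
train, val, test = split_dataset(names, n_val=464, n_test=454)
print(len(train), len(val), len(test))  # 4535 464 454
```

Fixing the seed matters more than the exact method: it keeps the test images from leaking into training if the script is rerun.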

2. The format of COCO

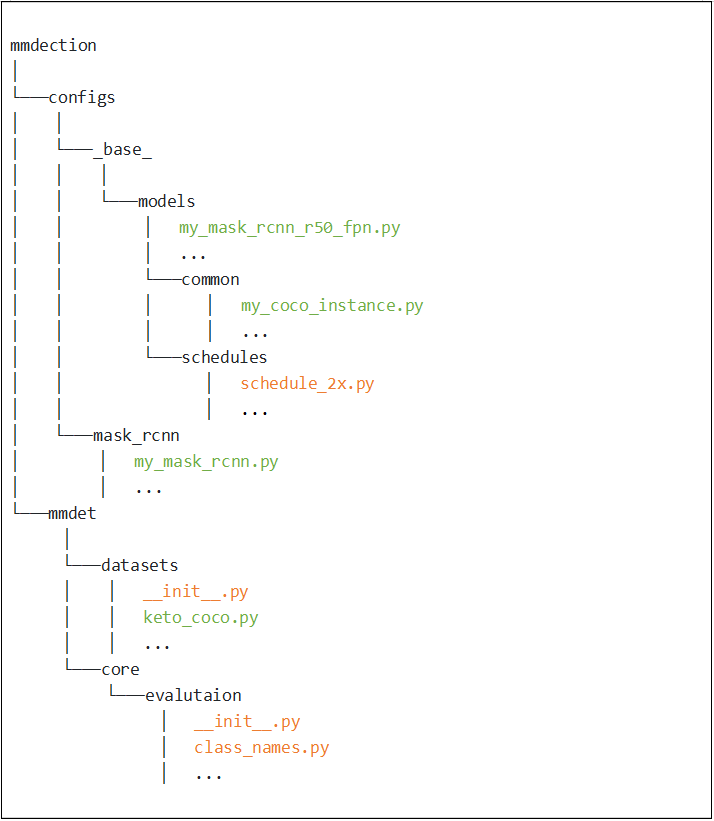

As a well-known blog puts it, we restructure our data as COCO not because it is the best format, but because it is the most widely used and accepted one. So we need to arrange our dataset in that format. Fortunately, there are many tools that automate this conversion, for example pycococreator.

All we need to do is set the relevant INFO, LICENSES and CATEGORIES, along with the corresponding directories.

1 | INFO = { |

The structure is from the blog. You can refer to it to create your custom dataset. What I want to mention is that if a single image has many masks of different objects (not the case in our current setting), the annotation_id should start from 0 and increase by one for each mask, so that it stays unique across the whole file, while image_id simply identifies the image a mask belongs to. As far as I can tell, annotation_id does not have to match the “id” in CATEGORIES, so it can simply start from zero.
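The id bookkeeping can be sketched as follows. This is a simplified illustration and not the pycococreator script itself: the `build_coco` helper, the category entry and the input layout are assumptions, and the `bbox` and `area` fields that a real COCO annotation also carries are left out for brevity.

```python
# Hypothetical single-class category table; the id value 1 is an assumption.
CATEGORIES = [{"id": 1, "name": "hammer", "supercategory": "tool"}]

def build_coco(images_with_masks):
    """images_with_masks: list of (file_name, width, height, [polygon, ...])."""
    coco = {"images": [], "annotations": [], "categories": CATEGORIES}
    annotation_id = 0  # one global counter, never reset between images
    for image_id, (file_name, width, height, polygons) in enumerate(images_with_masks):
        coco["images"].append(
            {"id": image_id, "file_name": file_name, "width": width, "height": height}
        )
        for polygon in polygons:
            coco["annotations"].append({
                "id": annotation_id,                  # unique per mask
                "image_id": image_id,                 # which image the mask belongs to
                "category_id": CATEGORIES[0]["id"],   # independent of annotation_id
                "segmentation": [polygon],            # flat [x0, y0, x1, y1, ...]
                "iscrowd": 0,
            })
            annotation_id += 1
    return coco
```

With two masks on the first image and one on the second, the annotation ids come out as 0, 1, 2 while the image ids are 0, 0, 1.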

After running the pycococreator script, we get a single JSON file named “instance_train2022.json”. We can use the visualizer script provided in that repo to check whether the COCO JSON file was generated correctly. The file contains the information from each mask.png, extracted with a contour algorithm and finally stored in polygon format.

With that, we have successfully built our first COCO dataset!
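Besides looking at the visualization, a quick structural check of the JSON can catch id mistakes early. The sketch below is my own hypothetical helper, not part of pycococreator, and it assumes every segmentation is stored as polygons (flat coordinate lists) as described above.

```python
from collections import Counter

def check_coco(coco):
    """Return a list of human-readable problems found in a COCO-style dict."""
    problems = []
    image_ids = {img["id"] for img in coco["images"]}
    category_ids = {cat["id"] for cat in coco["categories"]}

    # Annotation ids must be unique across the whole file.
    counts = Counter(ann["id"] for ann in coco["annotations"])
    for ann_id, n in counts.items():
        if n > 1:
            problems.append(f"annotation id {ann_id} appears {n} times")

    for ann in coco["annotations"]:
        if ann["image_id"] not in image_ids:
            problems.append(f"annotation {ann['id']}: unknown image_id {ann['image_id']}")
        if ann["category_id"] not in category_ids:
            problems.append(f"annotation {ann['id']}: unknown category_id {ann['category_id']}")
        # A polygon needs at least 3 points, i.e. 6 numbers, in x/y pairs.
        for polygon in ann["segmentation"]:
            if len(polygon) < 6 or len(polygon) % 2 != 0:
                problems.append(f"annotation {ann['id']}: malformed polygon")
    return problems
```

To use it, load the file with `json.load` and pass the resulting dict to `check_coco`; an empty list means none of these particular problems were found.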

3. MMDetection

MMDetection is an open source object detection toolbox based on PyTorch. It is a part of the OpenMMLab project. It provides an abstraction over PyTorch that lets me train my network without writing any code. Frankly speaking, I would not use it if I had enough time to dig deep into Mask R-CNN, because it makes me rely heavily on the API, which does no good for my career. For now, given limited time, I have to use it.

To use it, I mainly followed the procedure in this Zhihu blog. However, it also contains some frustrating bugs, which I will explain below.

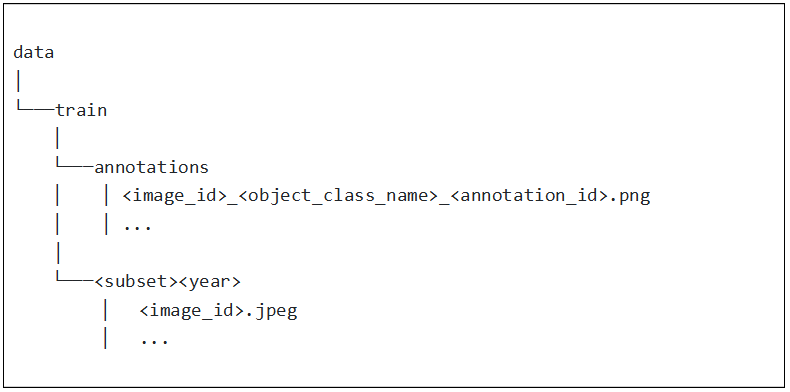

These are the files that need to be added or modified to train Mask R-CNN on a new dataset (green marks newly added files, brown marks files that need to be modified).

Let’s see exactly how these files work.

mmdetection/configs/mask_rcnn/my_mask_rcnn.py

1 | _base_ = [ |

This file simply links together all the files we added.

Next, we should define our own dataset class, since we already have the dataset in COCO format. We can copy coco.py and change it slightly to get our “keto_coco.py”.

mmdetection/mmdet/datasets/coco.py

1 |

|

mmdetection/mmdet/datasets/keto_coco.py

1 |

|

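As we can see, all we do is set the CLASSES variable to a list containing only one class, hammer. The reason for changing the tuple to a list seems to be a version-related problem in which a low-level function parses the value as the wrong type. One likely culprit, though I have not confirmed it, is that in Python ('hammer') without a trailing comma is a plain string rather than a one-element tuple, so a list avoids the ambiguity.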

mmdetection/mmdet/datasets/__init__.py

1 | __all__ = [ |

Since we defined our KpCocoDataset, we need to add the class name to the datasets’ __init__.py so that it is registered in the dataset collection, and then recompile the module.

In class_names.py, we should also add a simple function that returns the classes of our dataset.

mmdetection/mmdet/core/evaluation/class_names.py

1 | ... |

A matching change in __init__.py is also necessary. However, there is no explicit call to this function; I GUESS it is either never called or is looked up by name in some form.

my_mask_rcnn_r50_fpn.py is copied from mask_rcnn_r50_fpn.py. We just change the num_classes parameter from 80 to 1.

In my_coco_instance.py, we define the paths of the three COCO JSON files, as well as the training and test pipelines.

mmdetection/configs/common/my_coco_instance.py

1 | ... |

We will train on a single GPU with samples_per_gpu = 2, so we should divide the learning rate defined in schedule_2x.py by 8, because its default value assumes training on 8 GPUs with 2 samples per GPU.
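The scaling is just the learning rate multiplied by the ratio of total batch sizes. Here is a small sketch of the arithmetic; the `scale_lr` helper is hypothetical, and the base value of 0.02 is my assumption, inferred from the 2.500e-03 in the training log multiplied back by 8.

```python
def scale_lr(base_lr, base_gpus, base_samples_per_gpu, gpus, samples_per_gpu):
    """Scale the learning rate linearly with the total batch size."""
    base_batch = base_gpus * base_samples_per_gpu  # 8 * 2 = 16 in the default setup
    batch = gpus * samples_per_gpu                 # 1 * 2 = 2 in our setup
    return base_lr * batch / base_batch

# Assumed default lr of 0.02 for 8 GPUs x 2 samples; ours is 1 GPU x 2 samples.
lr = scale_lr(0.02, base_gpus=8, base_samples_per_gpu=2, gpus=1, samples_per_gpu=2)
print(lr)  # 0.0025
```

If the GPU could hold 4 samples instead of 2, the same formula would give 0.005.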

4. Train our model

We can use the following command to start training. I spent a lot of time debugging this step, but if the files mentioned above are configured correctly, it will eventually work.

python tools/train.py configs/mask_rcnn/my_mask_rcnn.py

When training, we can read the logs to make sure we are on the right track.

2021-10-27 15:16:22,077 - mmdet - INFO - Epoch [1][2400/9070] lr: 2.500e-03, eta: 17:30:32, time: 0.288, data_time: 0.010, memory: 7875, loss_rpn_cls: 0.0216, loss_rpn_bbox: 0.0081, loss_cls: 0.0925, acc: 97.2070, loss_bbox: 0.1120, loss_mask: 0.1732, loss: 0.4074

How is the figure of 9070 iterations per epoch calculated? Recall that we wrapped the training set in a RepeatDataset with 4 repeats, so the effective training set has 4535 * 4 = 18140 images. Training runs on a single GPU with samples_per_gpu = 2, so each epoch takes 18140 / 2 = 9070 iterations. Note that 9070 is the number of batches per epoch, not the batch size.
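The same calculation as a tiny sketch. The `iters_per_epoch` helper is hypothetical, and rounding up assumes the last partial batch is kept, which makes no difference here because the division is exact.

```python
import math

def iters_per_epoch(num_images, repeat_times, gpus, samples_per_gpu):
    """Number of batches needed to see the (repeated) dataset once."""
    effective_images = num_images * repeat_times  # 4535 * 4 = 18140
    batch_size = gpus * samples_per_gpu           # 1 * 2 = 2
    return math.ceil(effective_images / batch_size)

print(iters_per_epoch(4535, repeat_times=4, gpus=1, samples_per_gpu=2))  # 9070
```

This matches the `[2400/9070]` counter in the log line above.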

2021-10-27 22:03:28,363 - mmdet - INFO - Evaluating segm...

Some results are shown in the table below. In this article, we do not explain in detail what each statistic means.

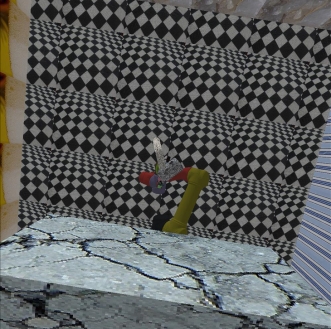

5. Evaluate and visualize our model

We can run the following script to visualize our model.

from mmdet.apis import init_detector, inference_detector, show_result_pyplot

Thank God, we finally trained the segmentation network successfully.

https://kami-code.com/2021/10/29/mmdection-hammer-segmentation/